R Loss Function

- Customized loss function. This tutorial provides guidelines for using a customized loss function in network construction. Model training example: let's begin with a small regression example. We can build and train a regression model with the following code.

- A loss function measures how well a prediction model predicts the expected outcome: the smaller the loss, the better the fit. The most commonly used method of finding the minimum of such a function is gradient descent.
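As a rough illustration of gradient descent, the sketch below minimises a hypothetical one-parameter loss f(w) = (w - 3)^2. The function name `gradient_descent`, the learning rate and the step count are assumptions chosen for the example, not values from the text.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    w = start
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w


# Hypothetical loss f(w) = (w - 3)^2, whose gradient is f'(w) = 2 * (w - 3).
w_min = gradient_descent(lambda w: 2.0 * (w - 3.0), start=0.0)
print(w_min)  # very close to 3, the minimiser of f
```

Each step shrinks the distance to the minimum by a constant factor here, so the iterate converges quickly; a learning rate that is too large would make it diverge instead.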

A loss function is a mapping \(\ell: Y \times Y \to \mathbb{R}_{+}\) (sometimes \(\mathbb{R} \times \mathbb{R} \to \mathbb{R}_{+}\)). For example, in binary classification the 0/1 loss function \(\ell(y, p) = I(y \neq p)\) is often used, and in regression the squared error loss function \(\ell(y, p) = (y - p)^{2}\) is often used.

A customized loss can also reward correct and punish incorrect binary classifications. In pseudocode:

    def special_loss_function(y_true, y_pred, reward_if_correct, punishment_if_false):
        loss = (if the binary classification is correct, apply the reward for that
                training item in accordance with the weight;
                if the binary classification is wrong, apply the punishment for that
                training item in accordance with the weight)
        return K.mean(loss, axis=-1)
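The losses described above can be sketched in plain Python. This is one possible reading, not a definitive implementation: the 0.5 threshold, the convention that a reward lowers the loss while a punishment raises it, and all function names are assumptions made for illustration.

```python
def zero_one_loss(y, p):
    """0/1 loss: 1 when the prediction differs from the label, else 0."""
    return 1 if y != p else 0


def squared_error_loss(y, p):
    """Squared error loss for a single regression prediction."""
    return (y - p) ** 2


def weighted_binary_loss(y_true, y_pred, reward_if_correct,
                         punishment_if_false, threshold=0.5):
    """Mean loss where correct items subtract a reward and wrong items add a punishment.

    Assumption: y_pred holds probabilities for class 1, thresholded at `threshold`.
    """
    losses = []
    for y, p in zip(y_true, y_pred):
        predicted_label = 1 if p >= threshold else 0
        if predicted_label == y:
            losses.append(-reward_if_correct)
        else:
            losses.append(punishment_if_false)
    return sum(losses) / len(losses)


# One correct and two wrong classifications: (-1 + 2 + 2) / 3
example = weighted_binary_loss([1, 0, 1], [0.9, 0.8, 0.2],
                               reward_if_correct=1.0, punishment_if_false=2.0)
print(example)
```

Because of the hard threshold this version is piecewise constant, so it is suitable for evaluation only; a loss used for gradient-based training would need a differentiable formulation.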

Logarithmic Loss, or simply Log Loss, is a classification loss function often used as an evaluation metric in Kaggle competitions. Since success in these competitions hinges on effectively minimising the Log Loss, it makes sense to have some understanding of how this metric is calculated and how it should be interpreted.

Log Loss quantifies the accuracy of a classifier by penalising false classifications. Minimising the Log Loss is basically equivalent to maximising the accuracy of the classifier, but there is a subtle twist which we’ll get to in a moment.

In order to calculate Log Loss the classifier must assign a probability to each class rather than simply yielding the most likely class. Mathematically Log Loss is defined as

$$ -\frac{1}{N} \sum_{i=1}^{N} \sum_{j=1}^{M} y_{ij} \log p_{ij}, $$

where \(N\) is the number of samples or instances, \(M\) is the number of possible labels, \(y_{ij}\) is a binary indicator of whether or not label \(j\) is the correct classification for instance \(i\), and \(p_{ij}\) is the model probability of assigning label \(j\) to instance \(i\). A perfect classifier would have a Log Loss of precisely zero. Less ideal classifiers have progressively larger values of Log Loss. If there are only two classes then the expression above simplifies to

$$ -\frac{1}{N} \sum_{i=1}^{N} \left[ y_{i} \log p_{i} + (1 - y_{i}) \log (1 - p_{i}) \right]. $$

Note that for each instance only the term for the correct class actually contributes to the sum.
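The multiclass definition can be sketched as follows. Since the indicator is 1 only for the correct label, the inner sum reduces to a single lookup per instance. The function name, the input layout (label indices plus per-class probability lists) and the clipping constant `eps` are assumptions for this example.

```python
import math


def multiclass_log_loss(y_true, probs, eps=1e-15):
    """y_true: correct label index per instance; probs: per-class probabilities per instance."""
    total = 0.0
    for label, row in zip(y_true, probs):
        # Only the probability assigned to the correct label contributes.
        p = min(max(row[label], eps), 1 - eps)  # clip to avoid log(0)
        total += math.log(p)
    return -total / len(y_true)


labels = [0, 2]
predicted = [[0.7, 0.2, 0.1],
             [0.1, 0.3, 0.6]]
loss = multiclass_log_loss(labels, predicted)
print(loss)  # equals -(log(0.7) + log(0.6)) / 2
```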

Log Loss Function

Let’s consider a simple implementation of a Log Loss function:

Suppose that we are training a binary classifier and consider an instance which is known to belong to the target class. We’ll have a look at the effect of various predictions for class membership probability.

In the first case the classification is neutral: it assigns equal probability to both classes, resulting in a Log Loss of 0.69315. In the second case the classifier is relatively confident in the first class. Since this is the correct classification the Log Loss is reduced to 0.10536. The third case is an equally confident classification, but this time for the wrong class. The resulting Log Loss escalates to 2.3026. Relative to the neutral classification, being confident in the wrong class resulted in a far greater change in Log Loss. Obviously the amount by which Log Loss can decrease is constrained, while increases are unbounded.
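The three cases can be reproduced with a minimal sketch of binary Log Loss. This is not the original post's code: the function name `log_loss_binary` and the clipping constant `eps` are assumptions introduced here.

```python
import math


def log_loss_binary(actual, predicted, eps=1e-15):
    """Binary Log Loss; `actual` holds 0/1 labels, `predicted` holds P(class = 1)."""
    total = 0.0
    for y, p in zip(actual, predicted):
        p = min(max(p, eps), 1 - eps)  # clip so log() stays finite
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(actual)


# A single instance known to belong to the target class (label 1),
# scored under a neutral, a confident-correct and a confident-wrong prediction.
for p in (0.5, 0.9, 0.1):
    print(p, log_loss_binary([1], [p]))
```

The printed values agree with the figures quoted above to the precision given there.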

Looking Closer

Let’s take a closer look at this relationship. The plot below shows the Log Loss contribution from a single positive instance where the predicted probability ranges from 0 (the completely wrong prediction) to 1 (the correct prediction). It’s apparent from the gentle downward slope towards the right that the Log Loss gradually declines as the predicted probability improves. Moving in the opposite direction though, the Log Loss ramps up very rapidly as the predicted probability approaches 0. That’s the twist I mentioned earlier.

Log Loss heavily penalises classifiers that are confident about an incorrect classification. For example, if for a particular observation, the classifier assigns a very small probability to the correct class then the corresponding contribution to the Log Loss will be very large indeed. Naturally this is going to have a significant impact on the overall Log Loss for the classifier. The bottom line is that it’s better to be somewhat wrong than emphatically wrong. Of course it’s always better to be completely right, but that is seldom achievable in practice! There are at least two approaches to dealing with poor classifications:

- Examine the problematic observations relative to the full data set. Are they simply outliers? In this case, remove them from the data and re-train the classifier.

- Consider smoothing the predicted probabilities using, for example, Laplace Smoothing. This will result in a less “certain” classifier and might improve the overall Log Loss.
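To illustrate the smoothing idea, the sketch below applies Laplace (add-alpha) smoothing to hypothetical class counts, such as votes from which a classifier derives its probabilities. The counts, the choice alpha = 1 and the function name are assumptions for the example.

```python
import math


def laplace_smooth(counts, alpha=1.0):
    """Turn class counts into probabilities, adding `alpha` pseudo-counts per class."""
    total = sum(counts) + alpha * len(counts)
    return [(c + alpha) / total for c in counts]


counts = [0, 10]                          # hypothetical: every vote went to class 1
raw = [c / sum(counts) for c in counts]   # unsmoothed probabilities: [0.0, 1.0]
smoothed = laplace_smooth(counts)         # [1/12, 11/12]

# Log Loss contribution if the true class turns out to be class 0.
eps = 1e-15
raw_loss = -math.log(max(raw[0], eps))    # clipped, otherwise infinite
smoothed_loss = -math.log(smoothed[0])
print(raw_loss, smoothed_loss)
```

The smoothed classifier gives up a little on instances it gets right in exchange for a far smaller penalty on the ones it gets emphatically wrong.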

Code Support for Log Loss

Using Log Loss in your models is relatively simple. XGBoost has logloss and mlogloss options for the eval_metric parameter, which allow you to optimise your model with respect to binary and multiclass Log Loss respectively. Both metrics are available in caret's train() function as well. The Metrics package also implements a number of Machine Learning metrics including Log Loss.

The post Making Sense of Logarithmic Loss appeared first on Exegetic Analytics.

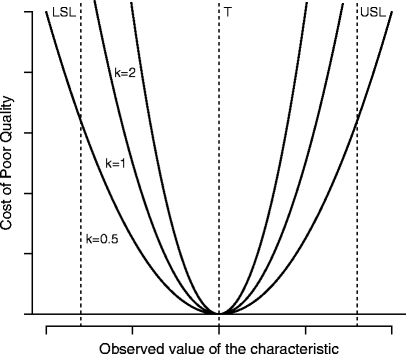

Evaluate regularized precision under various loss functions

Function that evaluates an estimated and possibly regularized precision matrix under various loss functions. The loss functions are formulated in precision terms. This function may be used to estimate the risk (vis-a-vis, say, the true precision matrix) of the various ridge estimators employed.

Usage

Arguments

Estimated (possibly regularized) precision matrix.

True (population) covariance or precision matrix.

A logical indicating if T is a precision matrix.

A character indicating which loss function is to be used. Must be one of: 'frobenius', 'quadratic'.

Details

Let \(\mathbf{\Omega}\) denote a generic \((p \times p)\) population precision matrix and let \(\hat{\mathbf{\Omega}}(\lambda)\) denote a generic ridge estimator of the precision matrix under generic regularization parameter \(\lambda\) (see also ridgeP). The function then considers the following loss functions:

Squared Frobenius loss, given by: $$ L_{F}[\hat{\mathbf{\Omega}}(\lambda), \mathbf{\Omega}] = \| \hat{\mathbf{\Omega}}(\lambda) - \mathbf{\Omega} \|_{F}^{2}; $$

Quadratic loss, given by: $$ L_{Q}[\hat{\mathbf{\Omega}}(\lambda), \mathbf{\Omega}] = \| \hat{\mathbf{\Omega}}(\lambda) \mathbf{\Omega}^{-1} - \mathbf{I}_{p} \|_{F}^{2}. $$

The argument T is considered to be the true precision matrix when precision = TRUE. If precision = FALSE the argument T is considered to represent the true covariance matrix. This statement is needed so that the loss is properly evaluated over the precision, i.e., depending on the value of the logical argument precision, inversions are employed where needed.

The function can be employed to assess the risk of a certain ridge precision estimator (see also ridgeP). The risk \(\mathcal{R}_{f}\) of the estimator \(\hat{\mathbf{\Omega}}(\lambda)\) given a loss function \(L_{f}\), with \(f \in \{F, Q\}\), can be defined as the expected loss: $$ \mathcal{R}_{f}[\hat{\mathbf{\Omega}}(\lambda)] = \mathrm{E}\{L_{f}[\hat{\mathbf{\Omega}}(\lambda), \mathbf{\Omega}]\}, $$ which can be approximated by the mean or median of losses over repeated simulation runs.
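The two losses and the simulation-based risk approximation can be sketched with NumPy. This is an illustration under stated assumptions, not the package's code: the function name `precision_loss` and the argument name `loss_type` are made up for this example, the true precision is taken to be the identity, and the estimator `inv(S + lam * I)` is a simple stand-in for a ridge-type estimator, not the one computed by ridgeP.

```python
import numpy as np


def precision_loss(E, T, precision=True, loss_type="frobenius"):
    """Loss of estimated precision E against truth T (precision or covariance)."""
    E = np.asarray(E, dtype=float)
    T = np.asarray(T, dtype=float)
    if loss_type == "frobenius":
        # Need the true precision: invert T only if it is a covariance.
        omega = T if precision else np.linalg.inv(T)
        return float(np.linalg.norm(E - omega, "fro") ** 2)
    if loss_type == "quadratic":
        # Need the inverse of the true precision, i.e. the true covariance.
        omega_inv = np.linalg.inv(T) if precision else T
        return float(np.linalg.norm(E @ omega_inv - np.eye(E.shape[0]), "fro") ** 2)
    raise ValueError("loss_type must be 'frobenius' or 'quadratic'")


# Approximate the risk by the mean or median loss over repeated simulation runs.
rng = np.random.default_rng(0)
p, n, lam = 3, 50, 0.1
omega = np.eye(p)                  # hypothetical true precision matrix
sigma = np.linalg.inv(omega)       # corresponding true covariance
losses = []
for _ in range(200):
    X = rng.multivariate_normal(np.zeros(p), sigma, size=n)
    S = np.cov(X, rowvar=False)
    E_hat = np.linalg.inv(S + lam * np.eye(p))   # stand-in ridge-type estimator
    losses.append(precision_loss(E_hat, omega, precision=True))
risk_mean = float(np.mean(losses))
risk_median = float(np.median(losses))
print(risk_mean, risk_median)
```

Repeating the loop over a grid of `lam` values would give a rough picture of how the estimated risk depends on the regularization parameter.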

Value

Function returns a numeric representing the loss under the chosen loss function.

References

van Wieringen, W.N. & Peeters, C.F.W. (2016). Ridge Estimation of Inverse Covariance Matrices from High-Dimensional Data, Computational Statistics & Data Analysis, vol. 103: 284-303. Also available as arXiv:1403.0904v3 [stat.ME].

See Also

covML, ridgeP

Aliases

- loss

Examples

Documentation reproduced from package rags2ridges, version 2.2.4, License: GPL (>= 2)